LiDAR maker AEye, based in California, has unveiled a software upgrade to its LiDAR platform for ADAS and autonomous vehicle systems. The upgrade, 4Sight+, offers a 20 percent improvement in sensor range and a 400 percent increase in spatial resolution over the previous generation. Tier 1s, including AEye’s partner Continental AG, can implement 4Sight+ to improve path planning at highway speeds, where small objects and vulnerable road users must be detected at a distance with high confidence. 4Sight+ can also be used to address hazardous vehicle cut-ins.

What Is Software-Definable LiDAR?

AEye, whose LiDARs work alongside cameras and radars, offers one of the few software-definable LiDAR families on the market. According to market research and technology analysis firm Yole Group, competitors in the software-definable LiDAR space include Baraja, PreAct, and Lumotive.

For AEye, software-definable means that LiDAR settings such as object revisit rate, instantaneous (angular) resolution, and classification range can be tuned or reconfigured on the fly to optimize performance and power consumption for the use case at hand.
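To make the idea concrete, here is a minimal Python sketch of use-case-dependent sensor settings. The `ScanProfile` class, both profiles, their values, and the 80 km/h switching threshold are hypothetical illustrations, not AEye’s parameters or interface; the point is only that the same hardware can be handed a different set of scan parameters at runtime.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScanProfile:
    """Hypothetical bundle of tunable LiDAR settings (illustrative names/values)."""
    name: str
    revisit_rate_hz: float         # how often a region of interest is re-scanned
    angular_resolution_deg: float  # instantaneous angular step between returns
    classification_range_m: float  # range at which objects should be classified


# Illustrative profiles only; these are not AEye's actual parameters.
PROFILES = {
    # Highway: finer angular resolution and longer classification range.
    "highway": ScanProfile("highway", revisit_rate_hz=10.0,
                           angular_resolution_deg=0.05,
                           classification_range_m=200.0),
    # Urban: coarser resolution but faster revisits of nearby objects.
    "urban": ScanProfile("urban", revisit_rate_hz=25.0,
                         angular_resolution_deg=0.2,
                         classification_range_m=80.0),
}


def select_profile(speed_kph: float, threshold_kph: float = 80.0) -> ScanProfile:
    """Choose a profile from vehicle speed; the threshold is an assumption."""
    key = "highway" if speed_kph >= threshold_kph else "urban"
    return PROFILES[key]


if __name__ == "__main__":
    for speed in (40.0, 120.0):
        profile = select_profile(speed)
        print(speed, profile.name, profile.angular_resolution_deg)
```

Switching profiles here is just a dictionary lookup; in a real sensor, the equivalent step would push new scan parameters to the hardware, which is what lets an update change behavior without a hardware change.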

Alongside the development of software-defined vehicles in which software updates can activate new features or improve existing ones, some companies are developing sensors for which the software can also be updated for further improvements, Pierrick Boulay, a senior technology and market analyst within Yole Group’s Photonics and Sensing division, explained in an interview. “Such updates are impossible for most LiDARs already implemented in cars today,” he said. “So, software-definable LiDAR has been designed so that algorithms can be updated to improve the range and resolution, like AEye, but it could also be related to the algorithms for detecting, identifying, and classifying objects.”

Having a software-definable LiDAR makes it possible to improve its performance during its life on the vehicle, which could be an advantage over other LiDARs, Boulay added. “But for automated driving functionalities, that will not be such a significant advantage in the end,” he said. “For automated driving, the real challenge relies on the fusion of data from sensors like cameras, radars, and LiDARs.”

Boulay explained that much work remains on this type of fusion, whether early fusion (using raw data) or late fusion (using object lists), and on the algorithms that make the right decision when sensors do not “see” the same thing. “What to do if the camera classifies an object as a cyclist and the LiDAR as a pedestrian?” he said. “Algorithms related to data fusion to enable a smooth and safe ride are much more critical than a software-defined sensor.”
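One simple way to resolve the kind of disagreement Boulay describes, assuming a late-fusion design in which each sensor reports a class label and a confidence per tracked object, is a weighted vote with a conservative fallback. The sketch below is illustrative only: the sensor weights, the 0.6 agreement threshold, and the coarse `PARENT` categories are assumptions, and production fusion stacks are far more involved.

```python
from collections import defaultdict

# Hypothetical coarse categories used when sensors disagree on the fine class.
PARENT = {
    "cyclist": "vulnerable_road_user",
    "pedestrian": "vulnerable_road_user",
    "car": "vehicle",
    "truck": "vehicle",
}


def fuse_labels(detections, sensor_weights, min_share=0.6):
    """Late fusion of per-sensor class hypotheses for one tracked object.

    detections: list of (sensor, label, confidence) tuples.
    sensor_weights: assumed trust weight per sensor (default 1.0).
    Returns (label, share), where share is the label's fraction of the total score.
    """
    scores = defaultdict(float)
    for sensor, label, confidence in detections:
        scores[label] += sensor_weights.get(sensor, 1.0) * confidence
    total = sum(scores.values())
    if total == 0.0:
        return "unknown", 0.0
    best = max(scores, key=scores.get)
    share = scores[best] / total
    if share >= min_share:
        return best, share
    # No clear winner: fall back to a shared coarse category if one exists.
    parents = {PARENT.get(label, "unknown") for label in scores}
    if len(parents) == 1:
        return parents.pop(), 1.0
    return "unknown", share
```

With equal weights, a camera reporting `cyclist` at 0.7 confidence and a LiDAR reporting `pedestrian` at 0.8 produce no clear winner, so the object falls back to `vulnerable_road_user`. A planner can then treat it cautiously without settling the fine-grained class.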

New Features & SaaS Revenues

AEye’s LiDAR capabilities can be updated or reprogrammed in the field, over the air (OTA), allowing for faster deployment of new features and a quicker path to SaaS revenues. Compared to passive LiDAR systems that scan with fixed patterns at fixed distances, software-definable LiDARs allow OEMs to reconfigure them for their specific and evolving requirements without costly hardware changes. “Improving the capabilities of a LiDAR without the need to change the hardware is a key advantage as it avoids the high cost and time related to new hardware development,” Boulay said.

The ability to update the sensor in real time and over the air will make a difference for OEMs designing next-gen electric and software-defined vehicles, explained AEye CEO Matt Fisch in an interview. “It allows OEMs to install AEye LiDAR across a vehicle fleet to enhance basic ADAS (L1-L2) functionality, like automatic emergency braking, while refining the algorithms needed to introduce advanced autonomous (L3-L5) features and functionality across all vehicle models over the air, in the future,” he said. “This capability reduces hardware validation costs for OEMs, dramatically reducing time-to-market to introduce new features to consumers.”

High-Speed Object Detection

Increasing spatial resolution by 400 percent is analogous to capturing a higher-quality image, making it easier for vehicles to see smaller objects at high speeds. Similarly, AEye’s 20 percent range improvement means vehicles receive more information about the road for path planning, including better prediction at longer distances. One question Yole’s Boulay had was whether AEye had increased the vertical resolution, the horizontal resolution, or both. In a follow-up interview, AEye said it had increased both.

Increasing both is a great improvement, though AEye’s range was already quite good for automotive applications, Boulay explained. “With that [increase], it is possible to detect and identify an object on the road soon enough so that the vehicle can react accordingly,” he said. “Is it an object small enough for the vehicle to drive over? Or is it too big, and the vehicle needs to change direction? Having a fine resolution is vital for the smooth behavior of the car.”
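A back-of-the-envelope calculation shows why resolution on both axes matters for small objects at range. Adjacent returns at range R with angular step θ are roughly R·tan(θ) apart, so halving the step on both the horizontal and vertical axes roughly quadruples the returns that land on a small object. The angular steps, object size, and speed below are hypothetical values chosen for illustration, not 4Sight+ specifications.

```python
import math


def point_spacing_m(range_m: float, angular_step_deg: float) -> float:
    """Approximate gap between adjacent returns at a given range."""
    return range_m * math.tan(math.radians(angular_step_deg))


def points_on_target(width_m: float, height_m: float, range_m: float,
                     h_step_deg: float, v_step_deg: float) -> int:
    """Rough count of returns on a face-on width x height object.

    Uses floor division: a crude estimate that ignores beam divergence
    and how the scan grid happens to align with the object.
    """
    across = int(width_m // point_spacing_m(range_m, h_step_deg))
    down = int(height_m // point_spacing_m(range_m, v_step_deg))
    return across * down


def lookahead_s(range_m: float, speed_kph: float) -> float:
    """Time until the vehicle reaches the edge of its sensing range."""
    return range_m / (speed_kph / 3.6)
```

With these assumed numbers, a 0.5 m by 0.5 m object at 200 m receives one return at a 0.1° step and four at 0.05°. Likewise, a 20 percent longer range buys 20 percent more look-ahead time at any constant speed.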

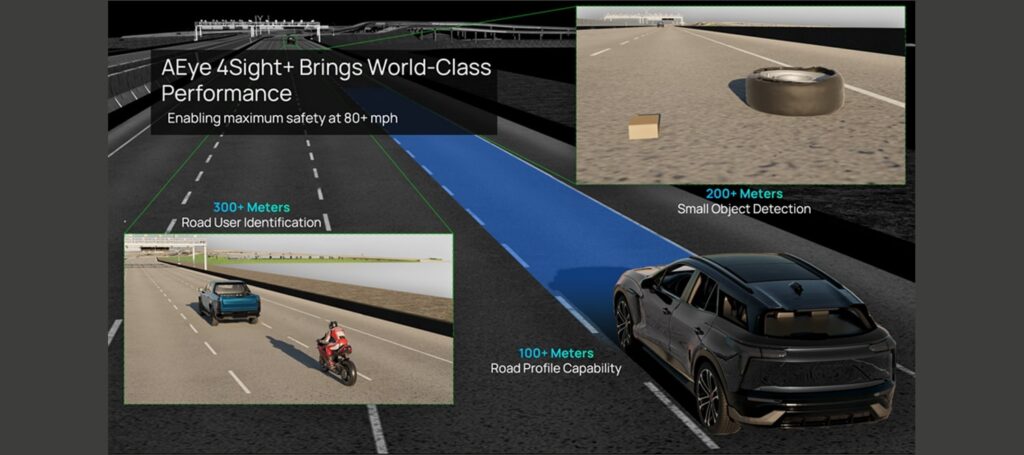

More resolution and greater range, made possible by new algorithms and calibration techniques, allow for safer driving because the vehicle can see things like bricks and tires from a farther distance (up to 200 meters), allowing more time for decision-making and alleviating the need to “slam on the brakes,” Fisch explained. “This is a critical capability for high-speed, hands-free highway driving,” he said. “4Sight+ detects road surfaces (asphalt and cement) up to 100 meters at highway speeds, even in direct sunlight and low-light conditions. It also allows vehicles to track vulnerable road users – pedestrians, motorcycles, and other vehicles – up to 300 meters.”
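The link between detection range and braking can be sketched with constant-deceleration kinematics: a vehicle traveling at speed v that must stop within distance d needs a deceleration of v²/(2d). The sketch below also subtracts the distance covered during an assumed 0.5 s perception-and-actuation delay. That delay and the 130 km/h speed are illustrative assumptions, not figures from AEye.

```python
import math


def required_decel_mps2(speed_kph: float, detect_range_m: float,
                        latency_s: float = 0.5) -> float:
    """Constant deceleration needed to stop short of an object first seen at detect_range_m.

    latency_s is an assumed perception-plus-actuation delay during which the
    vehicle keeps its speed; it is an illustrative value, not a vendor figure.
    """
    v = speed_kph / 3.6                       # km/h -> m/s
    braking_distance = detect_range_m - v * latency_s
    if braking_distance <= 0.0:
        return math.inf                       # object reached before brakes apply
    return v * v / (2.0 * braking_distance)   # from v^2 = 2 * a * d


if __name__ == "__main__":
    for range_m in (100.0, 200.0):
        print(range_m, round(required_decel_mps2(130.0, range_m), 2))
```

Under these assumptions, at 130 km/h an object first detected at 200 m requires about 3.6 m/s² of braking. The same object first detected at 100 m requires about 8.0 m/s², more than twice as much and approaching a full emergency stop.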

Phantom Braking

With 4Sight+, the company aims to help OEMs eliminate dangerous nuisances like phantom braking, which NHTSA defines as activation of the automatic emergency braking (AEB) system without an actual roadway obstacle. “The problem is that cameras alone and even cameras and radar are not enough to prevent this issue, as shadows, road curvatures, faint lane markings, parked vehicles, and metallic structures can create misleading sensing data with increased false positives and negatives, resulting in unintended braking,” Fisch said.

Phantom braking or false positives may be triggered by a variety of conditions depending on the sensor systems employed by the vehicle, explained an NHTSA spokesperson over email. “As driver assistance systems continue to evolve to employ more effective software filtering, multi-sensor designs, and mitigation strategies, false positives have decreased,” they wrote.

Given that NHTSA recently proposed requiring AEB on all passenger cars and light trucks, it seems imperative that researchers and designers focus heavily on reducing or eliminating braking errors. AEye, with 4Sight+, is poised to do its part. “A comprehensive sensor set, inclusive of a software-definable LiDAR, which performs well at night, in all weather conditions with high resolution at long distances, provides better probability of detection with reduced false positives to help eliminate phantom braking in ADAS systems entirely,” Fisch said.
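The software filtering and multi-sensor designs the NHTSA spokesperson mentions can be illustrated with a simple gate: request braking only when enough sensors agree on an obstacle for several consecutive frames. The `BrakeConfirmation` class and its thresholds are hypothetical and do not represent any vendor’s AEB logic. Real systems must also weigh the added latency, since every extra confirmation frame delays a genuine brake request.

```python
from collections import deque


class BrakeConfirmation:
    """Hypothetical AEB gate: request braking only when at least `min_sensors`
    sensors report an obstacle in each of the last `min_frames` frames."""

    def __init__(self, min_sensors: int = 2, min_frames: int = 3) -> None:
        self.min_sensors = min_sensors
        self.min_frames = min_frames
        # Rolling record of whether each recent frame met the sensor quorum.
        self.history = deque(maxlen=min_frames)

    def update(self, sensor_hits: dict) -> bool:
        """Add one frame of per-sensor obstacle flags; return True to brake."""
        agreeing = sum(1 for hit in sensor_hits.values() if hit)
        self.history.append(agreeing >= self.min_sensors)
        return len(self.history) == self.min_frames and all(self.history)
```

A shadow that only the camera flags never reaches the two-sensor quorum, so it cannot trigger braking. An obstacle confirmed by both camera and LiDAR for three frames in a row does.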

Addressing Hazardous Vehicle Cut-Ins

A bonus for Tier 1s developing LiDAR systems on the 4Sight+ platform is that the platform can now address hazardous vehicle cut-ins.

“Cut-in applications are not new in automotive,” Boulay said. “Such a use case is already taken into account by OEMs and Tier 1s in standard applications like adaptive cruise control and the automated emergency braking application, but at this time, it is based on camera and radar sensors.”

Dangerous vehicle cut-ins are among the most studied scenarios in ADAS and autonomous vehicle development and assessment. The landmark 1994 University of Indiana study on traffic safety found that human error was the cause of all lane change or merge crashes, and the researchers concluded that 89 percent of these crashes were due to drivers failing to recognize the hazard. As technologists set out to mitigate cut-in risk with driver-assist applications, researchers in a 2019 SAE technical paper cast doubt on the ability of ADAS and automated vehicle systems to behave as well as humans in cut-in scenarios. These and other researchers have defined and prioritized normal and dangerous cut-in scenarios to help design and assess predictive safety applications.

More recently, a 2022 NCAP report found that vehicle cut-ins account for 12 percent of the potentially dangerous situations an autonomous vehicle may encounter while lane keeping on a freeway. The report ranked as most dangerous the scenario in which the automated vehicle’s lane is clear but a vehicle suddenly cuts in from a congested adjacent lane, which requires quick and precise reactions to prevent a collision.

It seems clear that any improvement in predictive safety applications focused on cut-ins can positively affect crash statistics.
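Cut-in risk is commonly reasoned about with time-to-collision (TTC), the longitudinal gap divided by the closing speed, combined with an estimate of when the neighboring vehicle will cross into the ego lane. The sketch below flags a hazardous cut-in when both happen soon. The `Track` fields, the 3.5 m lane width, and the 2 s and 3 s thresholds are assumptions for illustration, and the model ignores acceleration and lateral overlap.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    """Hypothetical ego-relative state of a neighboring vehicle."""
    gap_m: float              # longitudinal distance ahead of the ego vehicle
    lateral_offset_m: float   # from ego lane center; positive = left
    lateral_speed_mps: float  # positive = moving left
    closing_speed_mps: float  # ego speed minus target speed; positive = closing


def time_to_lane_entry_s(track: Track, half_lane_m: float = 1.75) -> float:
    """Time until the target crosses the ego lane boundary (inf if not approaching)."""
    to_boundary = abs(track.lateral_offset_m) - half_lane_m
    if to_boundary <= 0.0:
        return 0.0                                   # already inside the ego lane
    # Lateral speed component directed toward the ego lane center.
    approach = -math.copysign(1.0, track.lateral_offset_m) * track.lateral_speed_mps
    return to_boundary / approach if approach > 0.0 else math.inf


def time_to_collision_s(track: Track) -> float:
    """Constant-speed TTC: gap divided by closing speed (inf if not closing)."""
    if track.closing_speed_mps <= 0.0:
        return math.inf
    return track.gap_m / track.closing_speed_mps


def is_hazardous_cut_in(track: Track, entry_horizon_s: float = 2.0,
                        ttc_limit_s: float = 3.0) -> bool:
    """Flag a cut-in that enters the lane soon and leaves little time to react."""
    return (time_to_lane_entry_s(track) <= entry_horizon_s
            and time_to_collision_s(track) <= ttc_limit_s)
```

Consider a case modeled on the scenario the NCAP report ranks as most dangerous. A slow vehicle 30 m ahead in a congested adjacent lane drifts toward the ego lane at 1 m/s while the ego vehicle closes at 15 m/s. It enters the lane in 1.75 s with a TTC of 2 s, so it is flagged.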

The LiDAR Market Ahead

Despite doubts about the future of LiDAR technology, OEMs and other major players are bringing new LiDAR-equipped products to market. Daimler, Volvo, Mobileye, Honda, Waymo, Aurora, Kodiak Robotics, and others announced new products and LiDAR plans during CES 2023. For its part, AEye teamed with Continental at the show to demonstrate how the long-range capabilities of Continental’s HRL 131 enable safety improvements and L3+ ADAS features in commercial and passenger vehicles. AEye has also secured substantial funding: $90 million in private equity and approximately $254 million in public equity from top institutional and corporate investors.

“Automakers are on a fast track to deliver intuitive, reliable ADAS services that improve safety,” Fisch said. “Our architecture allows us to match their timeline and brings OEMs and our partners one step closer to achieving their mission of Vision Zero: zero fatalities, injuries, and accidents.”